by Michael Cropper | May 14, 2016 | Developer, Security, Technical, WordPress |

A web developer or digital agency has built our website, so surely they must have backed it up, right? Probably not. Well ok, we have a web hosting company, and surely they back things up, right? Probably not. These are just some of the many assumptions that business owners make about their website and backups. I can honestly say that for the average business, your backups are probably woefully inadequate for your needs, and should anything go wrong, which I can guarantee it will at some point, you will be left up ‘the’ creek without a paddle.

Going one step further, there is no magic ‘backup’ solution; it’s not like buying a lemon from the supermarket. A lemon is a lemon, there is nothing else it can be. Instead, backups are a bit like apples. You can have many different types of apples, all with their different purposes based on your requirements. You wouldn’t put a cooking apple in a lunch box unless it was baked into an apple pie. Likewise you wouldn’t put a custard apple in an apple pie. Seriously, these things exist and have an interesting taste. I digress.

Back to backups. There are many different types of backup technologies which give you differing levels of security as a business and hence are either easier or harder to restore when something does go wrong. Again, it will go wrong at some point, trust me, it always does, this is technology we’re talking about. Unscrupulous cybercriminals target websites running certain technologies at scale, in a fully automated way, so do not think that you are off someone’s radar.

So let’s take a look at a couple of the different website backup technologies and what they both mean. This is by no means a definitive list but hopefully this should get you thinking about what you need to be investing in as a business.

Server Level Backups

Surely my web host runs server level backups? Maybe, but are you paying them to do that? Backups use server resources, CPU, RAM, Hard Drive space and bandwidth on the network, which all cost money to run. Unless you are paying your web hosting company specifically for backups, it is unlikely that they will be running server level backups for you.

Server level backups are great and are essential to have in place for any business. If you are unsure if you have this in place, then contact your web hosting company to check or get in touch and we can have a quick check to see what you have or haven’t got and advise accordingly.

Your server level backups are designed for one thing, restoring the entire server should anything go wrong with the hardware or similar. They are often run daily and stored for a period of time with multiple restoration points for added levels of protection. This is great if you’re on your own dedicated web server with just your own website as this means that restoring a backup can be much faster than if you are on a shared web server of sorts.

If you are on any kind of shared web server, where there are multiple websites hosted on the same server, then this is where things get tricky. If you don’t have, as a minimum, your own Virtual Private Server (VPS), then this most likely applies to you. Because your website sits on the same web server as other websites, should anything go wrong with your individual website, restoring just that part is much more time consuming and costly.

You see, server level backups are designed to protect everything on the web server should anything go wrong at the web server level. They aren’t designed to protect against a single issue on a single website, for example if your website was hacked into and deleted. If this did happen, it is not easy to simply restore your individual website, as the backups have to be combed through and the relevant parts reinstated, which is a fiddly job for the technical team and hence costly.

Server level backups are designed for keeping backups of things like any server settings that have been implemented specific to the needs of the websites hosted along with any control panel settings which may be in place. They are designed to be used as a single setup which can then be restored as a whole, not parts of the whole.

So yes, server level backups are extremely important and if you don’t have these in place now, then you need to get these in place.

Website Level Backups

The next type of backup to make sure you have in place is a website level backup. This is where your website setup as a whole, which sits on your web server, is backed up in its entirety. Far too often, the website backup technologies that people have in place are woefully inadequate.

Your website level backups need to be fully automated, so if you have to manually set this running, then this is no good. Your website level backups need to include everything on your website, files and databases to ensure that the data can be easily restored. Your website backups also need to be stored in a remote location, so not on your web server. A backup sitting in the same place as the main system means that when the main system goes down, you have potentially lost your backup too.
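To make the three requirements above a little more concrete, here is a minimal Python sketch of a website level backup. It is an illustration under stated assumptions, not a finished backup system: the function name `create_site_backup` and all of the paths are hypothetical, the database dump is assumed to have already been exported to a `.sql` file by your database's own dump tool, and `backup_dir` is assumed to point at storage that lives somewhere other than your web server (writing to a folder on the same server would not meet the "remote location" requirement).

```python
import tarfile
from datetime import datetime, timezone
from pathlib import Path


def create_site_backup(site_dir, db_dump_file, backup_dir):
    """Bundle the website files and a database dump into one dated archive."""
    site_dir = Path(site_dir)
    db_dump_file = Path(db_dump_file)
    backup_dir = Path(backup_dir)  # assumed to be off-server storage
    backup_dir.mkdir(parents=True, exist_ok=True)

    # A timestamp in the file name keeps multiple restore points instead of
    # overwriting the previous backup.
    stamp = datetime.now(timezone.utc).strftime("%Y%m%d-%H%M%S")
    archive_path = backup_dir / f"{site_dir.name}-{stamp}.tar.gz"

    with tarfile.open(archive_path, "w:gz") as archive:
        # Everything on the website: code, themes, plugins, uploads.
        archive.add(site_dir, arcname="files")
        # The database export, stored alongside the files.
        archive.add(db_dump_file, arcname="database.sql")
    return archive_path
```

To make this fully automated, a script like this would typically be run on a schedule (a daily scheduled task, for example) rather than by hand.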

WordPress makes website level backups reasonably straightforward, which means that when you invest in WordPress Security & Backups, the backups and security side of things are taken care of for you. It also means that when you have the right website level backups in place and things do go wrong, as they always do, restoring these backups is far faster and hence much cheaper for you. Make sure you have adequate website level backups in place, suitable for your needs. If you are in any doubt, then get in touch and we’ll happily review your current setup for free and advise accordingly.

Restoring a Backup and Responsibilities

Surely if anything happens it is the responsibility of your web developer, digital agency or web hosting company to restore any kinds of backups for free? No. Restoring any kind of backup takes time, and the level of backups you have previously chosen to invest in determines the ultimate cost of restoring them.

As explained previously, if you are on a shared web hosting environment of any kind, then it is going to cost you a lot more to restore from server level backups alone, as your website has to be unpicked from the whole server level backup and reinstated. By contrast, when you use website level backups alongside server level backups, your website is far easier to restore and hence cheaper for you in the long run.

As a business owner, you are responsible if your website is hacked, not the service provider, and it will cost you either way. It’s your choice whether to pay a small amount every month or a large amount when things go pear shaped. We would always recommend regular maintenance, security updates and automated backup technologies, as we have seen time and time again how this saves companies money in the long run.

If you are worried about the level of backups you have in place within your organisation for your website technologies, then get in touch and we’ll review your current setup and recommend relevant solutions that can be implemented.

by Michael Cropper | May 9, 2016 | Developer, Technical, WordPress |

Accelerated Mobile Pages, AMP for short, is an open source project designed to make the web faster. Speed is a challenge for many people accessing web content on mobile devices, and with over 50% of web content accessed via mobile devices, this is more important now than ever.

The concept of Accelerated Mobile Pages is all about stripping out irrelevant styling and fancy JavaScript technologies to make the page load much faster, with the most important aspect, the content, loading virtually instantly.

If you’re interested in the finer details behind the project, have a read all about it here, https://www.ampproject.org/. The technical aspects behind the project are quite significant as are the underlying details about how your web browser loads content as standard.

Accelerated Mobile Pages AMP Speed Test

So we thought we’d put AMP to the test to see just how much faster it really is for WordPress in comparison to a rather bloated website which requires a bit of TLC, like most WordPress websites on the whole. We repeated the tests below on the same website multiple times and could not believe the performance increases we saw. The Accelerated Mobile Pages plugin for WordPress is available for download from the WordPress repository. One note on the plugin: at the time of writing, it only supports Posts in WordPress, i.e. your blog posts. Pages and Ecommerce Products aren’t currently supported.

Blog Post Loaded As Normal

Blog Post Loaded with Accelerated Mobile Pages AMP Technology

Awesome! Try loading this page you are viewing now as an Accelerated Mobile Page here to see how this looks: https://www.contradodigital.com/2016/05/09/wordpress-accelerated-mobile-pages-amp-speed-test/amp/

As a footnote: yes, 30 seconds is darn slow for a website to fully load. Yes, tools like Pingdom are not perfect, as many users are more interested in when the website appears to have finished loading as opposed to when the last byte has arrived. And yes, this is only a single site as a comparison. Get involved and give this a go on your own website to see how it performs for you. Every website is significantly different and every web server has been configured differently based on individual needs.
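If you want a very rough first comparison on your own site before reaching for a full speed testing tool, a sketch along the following lines may help. It assumes the AMP version of a post lives at the post URL with `amp/` appended, as with the example link above; the function names `amp_url` and `time_download` are made up for illustration. Bear in mind that this only times the download of the raw HTML, not images, scripts or rendering, so the numbers will be far smaller than a full page load test and are only useful relative to each other.

```python
import time
import urllib.request


def amp_url(post_url):
    """Return the AMP variant of a post URL by appending 'amp/'."""
    return post_url.rstrip("/") + "/amp/"


def time_download(url, timeout=30):
    """Return the seconds taken to download the raw HTML of a URL."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read()  # pull down the whole response body
    return time.perf_counter() - start
```

Calling `time_download(post)` and `time_download(amp_url(post))` a few times each and comparing the results gives a crude indication of the difference in page weight.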

by Michael Cropper | May 9, 2016 | Developer, Security, Technical |

Just like the computer that you are reading this blog post on, your web server has a lot of software installed to keep it running. And like all software, it needs to be kept up to date to avoid security issues. Web server security is an enormous topic with many moving parts, many of which are often incomprehensible to the non-techie.

Seriously though who is updating your web server software?

Your web developer? Unlikely. Web developers often have very limited knowledge of the underlying technologies of web servers.

Your web hosting company? Possibly, but unlikely unless you’re paying them to do so.

Your IT team? Unlikely. Your IT team is often focused on the computers, laptops and devices around the office and often believes that it is the web developer’s or web hosting company’s job to do this.

As a business owner it is your responsibility to be asking these questions and making sure that you have this part of your cybersecurity looked after. If you don’t know who is looking after this for you, you need to find out. Get in touch if you find out that this is not being looked after, as I suspect is the case for most people reading this blog post. As with all software, it is essential that your web server software is kept up to date to avoid potential cyber attacks.

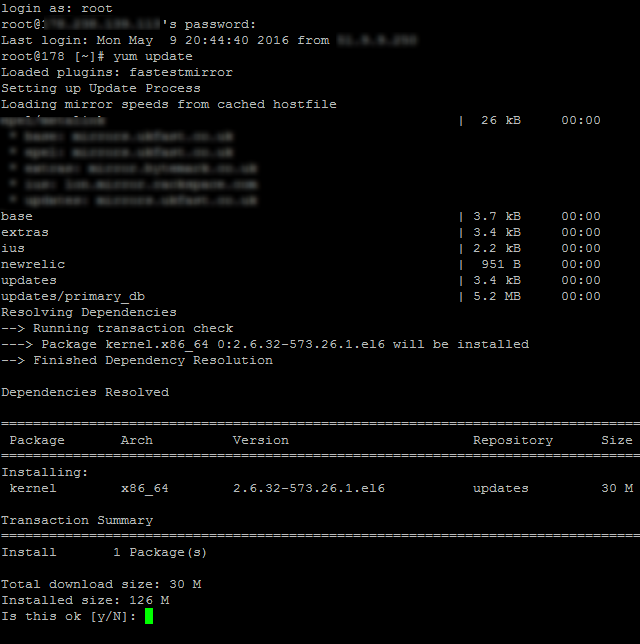

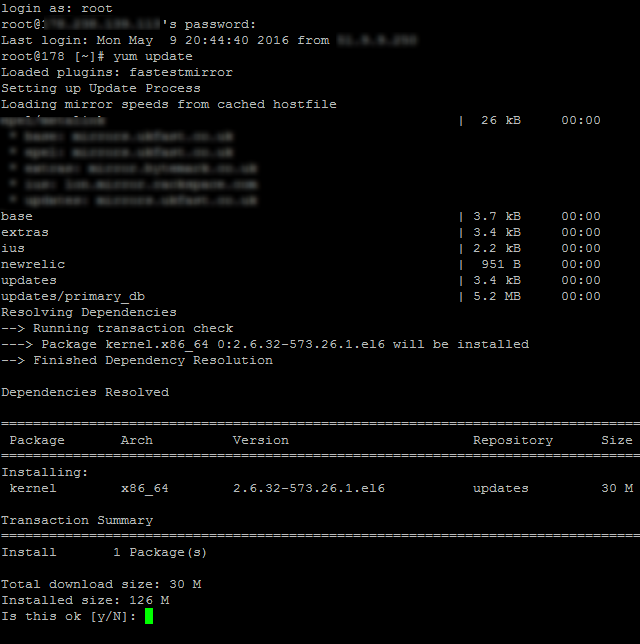

This is what one small part of updating server software actually looks like to the teckies managing this for you, no pretty user interface, it’s primarily command line management;

by Michael Cropper | Apr 9, 2016 | Developer |

When developing basic websites, you never need to be able to access your local machine from the internet. Whatever language or platform you are using, you’ll likely have your local development environment at something like http://localhost:8080 or similar. This allows you to test the code you are writing with ease, without having to keep uploading it to your web server, which is a more time consuming process.

When you start getting involved with building more advanced web applications, opening up your localhost environment to the internet is such a valuable tool, I can’t stress this enough. Particularly when working with technologies like webhooks, payment gateways and other integrated technologies. This is required because these websites need to send data back to your application outside of the initial request, and they cannot send this data to your local machine without being able to access it. You see, your local machine is protected by multiple firewalls, within your router as a hardware firewall and also with the firewall software running on your machine. So even if the outside website did know your IP address to send the data to, they wouldn’t be able to get through your firewalls anyway.

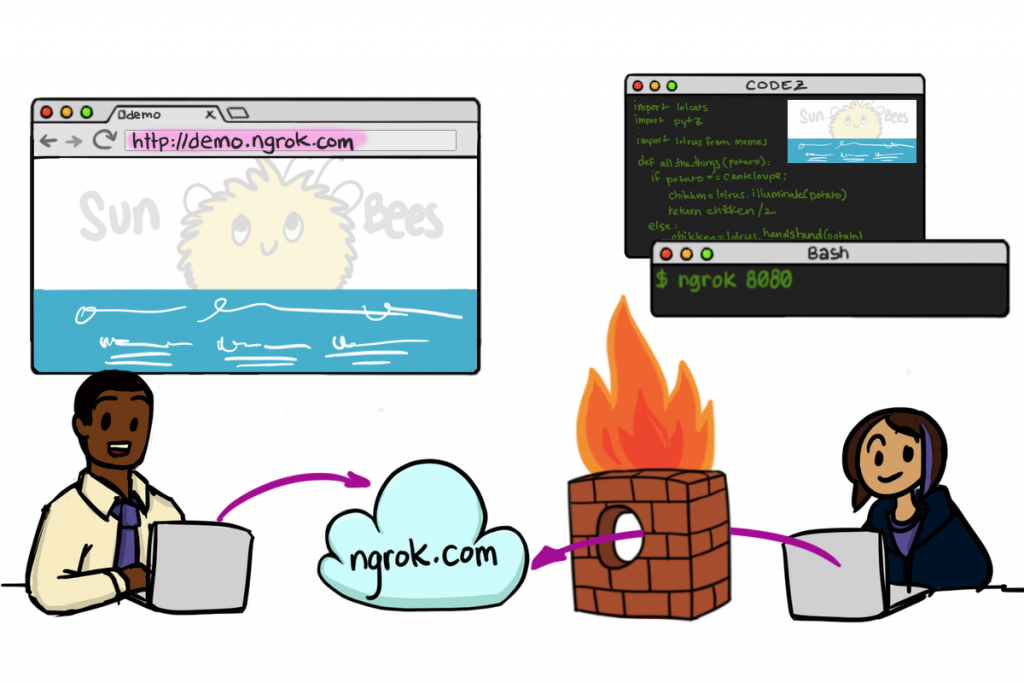

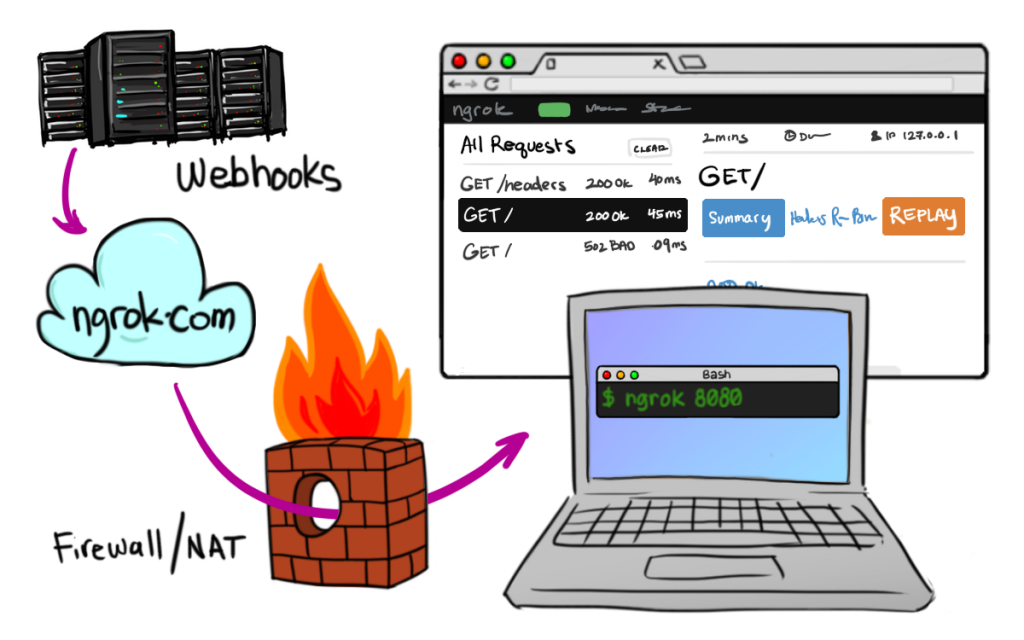

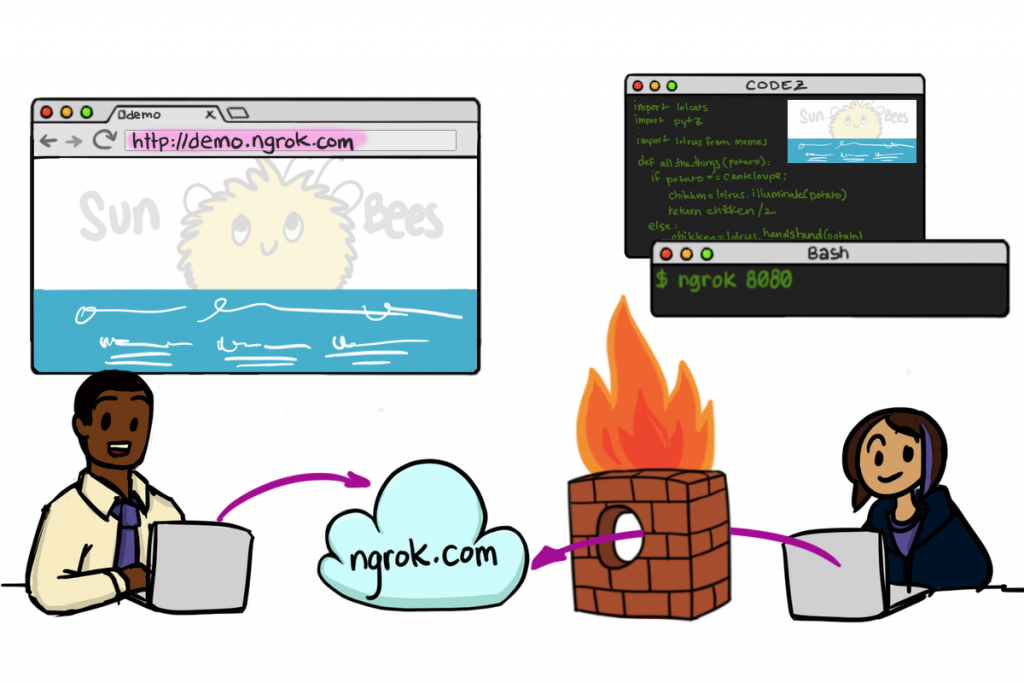

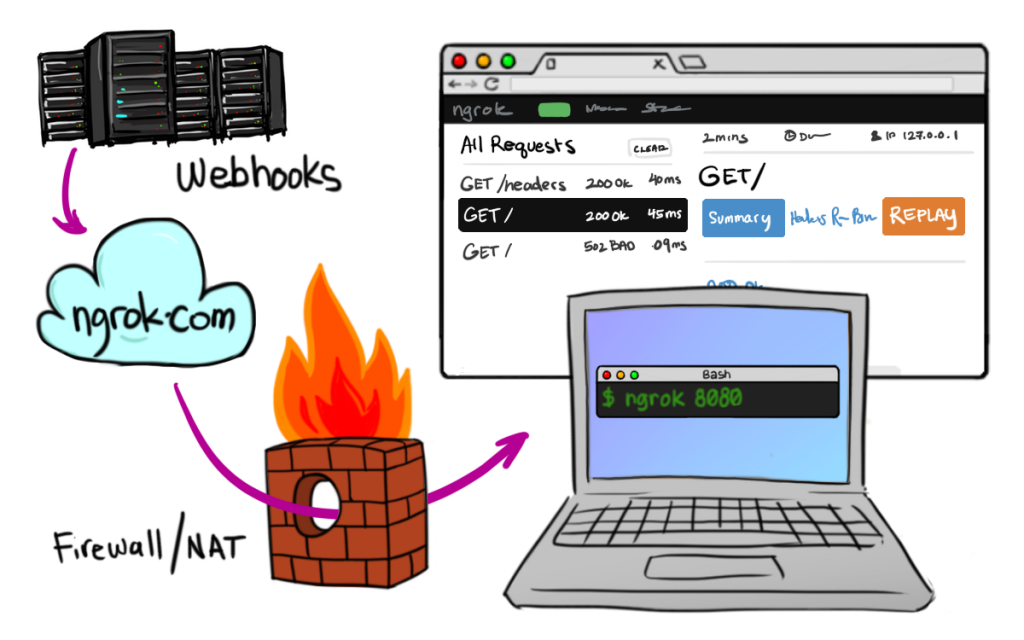

This is why you need to be able to access localhost from the internet, which is only possible with specialist software designed to do exactly this. There are several different tools available for this; personally, I find Ngrok so easy to use that it is the only choice for me. This is quite a technical topic to get your head around if you haven’t used Ngrok before, so a couple of images from their website help explain how it works:

How to Access Localhost from the Internet with Ngrok

Image source

How to Send Data from Webhooks to Localhost with Ngrok

Image source

Ngrok has a list of platforms that it works on and it can be set up in as little as 30 seconds; it is seriously awesome. So if you want to know how to access localhost from the internet or send webhook data to your local machine, take a look at Ngrok. They have a free version of the software and paid subscriptions which are dirt cheap.
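To give a feel for the local half of this setup, here is a minimal sketch of a webhook receiver written with nothing but Python's standard library. It is purely illustrative: the names `WebhookHandler`, `received_events` and `start_server` are made up for this example, and a real payment gateway or webhook provider will have its own payload format and signature checks which are not shown here.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

received_events = []  # hypothetical in-memory store, for illustration only


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read exactly the number of bytes the sender says it is posting.
        length = int(self.headers.get("Content-Length", 0))
        raw = self.rfile.read(length)
        try:
            event = json.loads(raw or b"{}")
        except ValueError:
            # Not valid JSON, so tell the sender it was a bad request.
            self.send_response(400)
            self.end_headers()
            return
        received_events.append(event)
        body = b'{"status": "received"}'
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet in this sketch


def start_server(port=8080):
    """Start the receiver on localhost in a background thread."""
    server = HTTPServer(("127.0.0.1", port), WebhookHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

With something like this listening on port 8080, running `ngrok http 8080` gives you a public URL which forwards to it, and that public URL is what you would enter as the webhook address in the third party service.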

by Michael Cropper | Apr 6, 2016 | Developer, WordPress |

The WordPress REST API Version 2 is brand new in the developer world which means that the documentation is extremely limited. Hopefully this can help a little for others trying to debug problems like this.

Posting comments to WordPress via the REST API Version 2 is actually relatively straightforward once you figure out how to do this. For those looking for a quick solution, using POST on the following URL will do just that: post a comment on this blog post you are reading right now.

https://www.contradodigital.com/wp-json/wp/v2/comments?author_name=Your%20Name%20Here&author_email=your-email-address@website-address-here.com&content=Your%20Comment%20Here&post=1604252

Now for a few comments on the technical aspects to understand how this works.

Read the WordPress API documentation under the Create a Comment heading. As you will see, the documentation is minimal to say the least. It’s something the WordPress Core team are working on, so stick with it.

Essentially though, there are various query string parameters you can append to the request to send data into WordPress as a comment. It’s important to note that this is a POST request not a GET request. GET requests on this URL will not work. You need to use a tool such as Advanced REST client which allows you to POST data to API URLs which is extremely handy.

You will no doubt have debugging to do when you are first testing this, as nothing ever goes to plan. Make sure you have comments turned on at the global WordPress level under the Settings > Discussion tab, and also make sure that you have comments turned on for individual posts, as sometimes these have been disabled. It’s always best to show these on your website too.

As with anything comment related with WordPress, make sure you are using the Akismet plugin to block any spam as this is a real nightmare on WordPress without Akismet.

There are lots of extremely useful applications for this. We’ve recently been using it to post comments from a mobile app into WordPress, which then acts as the comment moderation system, meaning we don’t have to go and build that side of the functionality.

Make sure you are escaping the content which is included in the query string too, and keep an eye out for any rogue spaces or special characters which may be breaking your POST request if this hasn’t worked. The usual things to check which you often miss :)
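As a sketch of how the escaping and the POST method fit together, the following Python snippet builds the same kind of URL as the example above and wraps it in a POST request. The function name `build_comment_request` is hypothetical, and the site address and post ID in the usage note are placeholders you would swap for your own; whether the comment is actually accepted still depends on how comments are configured on the WordPress site.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request


def build_comment_request(site_url, post_id, author_name, author_email, content):
    """Build a POST request for the /wp-json/wp/v2/comments endpoint."""
    params = {
        "author_name": author_name,
        "author_email": author_email,
        "content": content,
        "post": post_id,
    }
    # quote_via=quote encodes spaces as %20 and escapes special characters
    # such as & so they cannot break the query string.
    query = urlencode(params, quote_via=quote)
    url = f"{site_url.rstrip('/')}/wp-json/wp/v2/comments?{query}"
    # An explicit method is needed because a GET on this URL will not
    # create a comment.
    return Request(url, data=b"", method="POST")
```

For example, `build_comment_request("https://example.com", 123, "Jane Doe", "jane@example.com", "Nice post & thanks!")` returns a request whose URL contains `Jane%20Doe` and `%26` in place of the space and ampersand; passing it to `urllib.request.urlopen` would then send it.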

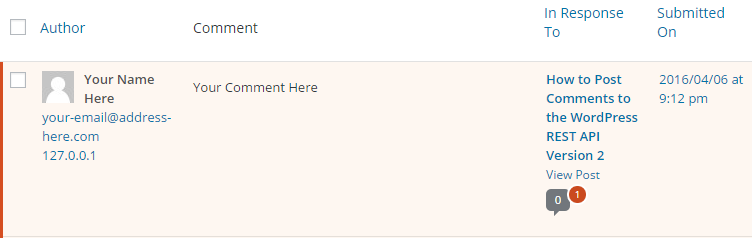

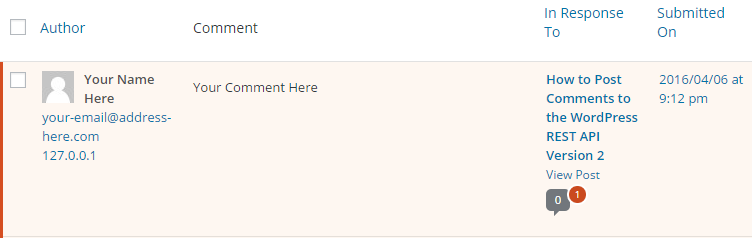

When you successfully post a comment, you will see this waiting in your comment queue;

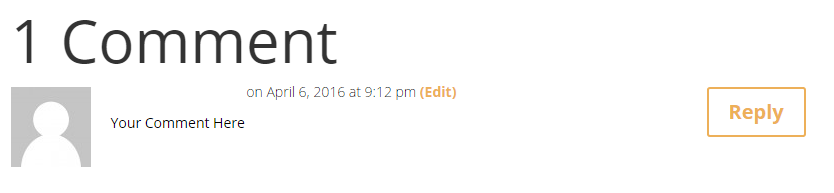

Then when you approve the comment you will see this displays correctly on your website as you would expect;

Have a go yourself. Customise the above URL with your own details and comments within the parameters and I’ll publish any successful comments. This handy URI Encoder / Decoder tool may come in useful when writing a comment or your name if it includes spaces or special characters, something I haven’t got around to migrating over to this site just yet.

by Michael Cropper | Apr 4, 2016 | Developer |

If you have ever worked with websites, web servers, cPanel, WHM and Exchange, you will know that no two situations are ever the same. With default configurations for installations, changing settings and automatic updates, it’s easy for systems to sometimes get tangled in a few knots. Thankfully, there are tools available to debug things and identify specifically what is going on. Below is a process which will hopefully save you hours of investigation by quickly identifying what is happening when your emails are going astray.

Sending & Receiving Emails

Ok, so if we look at the underlying technologies about sending & receiving emails first, this helps to understand where to first look for problems when they do occur. Let’s break this down into the two main categories of IMAP and Exchange as they both behave significantly differently. For Exchange, whether you are running a private Exchange server or whether you are on the Microsoft Hosted Exchange platform, this doesn’t make a huge deal of difference, so this information is going to be generic to the two with the understanding that there are always the odd discrepancies depending on configurations etc.

I’m going to assume that you have already set your MX records correctly in your DNS. Make sure you also have an SPF record (a TXT record containing your SPF details) if you are sending from IMAP, or you are likely to get blacklisted by Microsoft Exchange and will need to un-blacklist yourself.

Microsoft Exchange – Sending & Receiving Emails

Whenever you send emails from name@website.com from Microsoft Exchange, these emails are automatically handled by Exchange itself. Meaning that Microsoft Exchange respects the rules of the internet in relation to the DNS MX Records. So when you send emails to hello@another-website.com, Microsoft Exchange looks up the MX records for another-website.com and simply sends the message on its merry way to be picked up by the mail exchanger. Simple.

Whenever you receive emails into name@website.com, likewise, Microsoft simply looks within the Exchange settings for the website.com domain and decides which account this belongs in, if this is an invalid account or if this needs to be forwarded on to a distribution group or similar. Fairly straight forward.

Overall, Microsoft Exchange does just work and rarely is the problem at Microsoft’s end.

IMAP, cPanel, WHM and Web Servers – Sending & Receiving Emails

In comparison to Microsoft Exchange above which is essentially a single piece of software, sending and receiving emails with IMAP is a whole different ball game. It is one of the reasons why we always recommend companies use Microsoft Exchange, not only because it is a much more powerful system, but because it also costs you more for us to support you with IMAP related issues as they take 10x longer to get to the bottom of. Just use Exchange where possible.

Nevertheless, many businesses use a mixture of technologies for a variety of reasons, so it’s important to be able to understand how things fit together and, most importantly, how things can be quickly debugged and tweaked. So let’s look at how these different technologies fit together.

WHM is an administrator piece of software for managing web servers, much like an administrator account on your personal computer which allows you to install software, configure settings, create additional users etc. The difference is that it gives you ultimate control of functionality through an easy to use interface and, most importantly, debugging tools for email delivery from and to your web server.

cPanel is designed for individual users and accounts. It is the equivalent of having an administrator account on your home computer (i.e. WHM) and then an account each for Mum, Dad, Son, Daughter (i.e. cPanel accounts). It is designed to allow each user / account to manage their own items on the web server in a safe and secure way without being able to interfere with other people’s data.

IMAP is a technology which spans WHM, cPanel and often desktop applications, which makes things a little more complex when debugging issues. Domains such as website.com and my-other-website.com are assigned to individual cPanel accounts, and individual email addresses such as name@website.com are configured within those cPanel accounts, even though it is the actual server which sends and receives emails and decides how to deal with email routing.

Websites also send emails from scripts and code, which introduces another layer of complexity. Depending on how the underlying settings are configured, emails from websites may or may not be delivered, even if they appear to be sent when you submit a message through the contact form on the website, for example. Always test your contact forms on a regular basis.

Whenever you receive email to your IMAP email address for name@website.com, ‘the internet’ routes this email from the sender, via the DNS MX record settings, to your web server where your emails live. The software running on your web server then ultimately decides which account this should end up in. Receiving emails is generally ok as long as the accounts are set up.

Whenever you send email from your IMAP email address for name@website.com, this is when things get a little different. This is because there are many technologies interacting together, particularly when you are using a desktop email application or a website to send emails. Web servers, websites and email applications may each have been configured in a specific way, and it is worth remembering that outgoing emails are actually handed over using SMTP as opposed to IMAP, which only covers how your email application reads the mailbox on the server.

Debugging Email Deliverability Problems

Ok, so now we’ve had a look at the many moving parts behind the scenes when it comes to emails, let’s look at how you go about debugging email deliverability problems and where to start. I’m going to stick with IMAP here as Exchange is an enormous topic on its own and takes quite a bit of learning. Once Exchange is configured, it just works. Get in touch if you are having issues related to Microsoft Exchange configuration and I’m sure we can help walk you through what needs to be done.

DNS Configuration

This has been mentioned before, but it’s worth reiterating. Make sure your DNS is configured correctly and is pointing to the right place. This relates specifically to your MX records, and any TXT, A, SPF or SRV records which may need to be created depending on your email technology in the background. Speak with your web hosting company for the actual settings here.
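As a small illustration of what an SPF record looks like and the kind of slip-ups worth checking for, here is a hedged Python sketch. It only inspects a record you paste in as text and does not look anything up in DNS; the function names `parse_spf` and `spf_warnings` are made up for this example, and the two checks are a simplification rather than a full validator. Your web hosting company remains the source of truth for the actual value.

```python
def parse_spf(txt_record):
    """Split an SPF TXT record into its terms; raise ValueError if it is not SPF."""
    parts = txt_record.strip().split()
    if not parts or parts[0].lower() != "v=spf1":
        raise ValueError("not an SPF record: it must start with 'v=spf1'")
    return parts[1:]


def spf_warnings(txt_record):
    """Return human-readable warnings for two common SPF slip-ups."""
    terms = [term.lower() for term in parse_spf(txt_record)]
    warnings = []
    # Strip the optional qualifier (+, -, ~ or ?) to find the 'all' mechanism.
    has_all = any(term.lstrip("+-~?") == "all" for term in terms)
    has_redirect = any(term.startswith("redirect=") for term in terms)
    if not has_all and not has_redirect:
        warnings.append("no 'all' mechanism, so unlisted senders get a neutral result")
    if "all" in terms or "+all" in terms:
        warnings.append("'+all' authorises every server on the internet to send as you")
    return warnings
```

For example, `spf_warnings("v=spf1 mx include:spf.protection.outlook.com -all")` returns an empty list, whereas a record ending in `+all` is flagged.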

Review What is Actually Happening

Next, review what is actually happening to try and determine where the problem may lie. Review;

- Can you send emails via webmail?

- Can you receive emails via webmail?

- Can you send emails via your desktop application?

- Can you receive emails via your desktop application?

- Is your website sending emails?

- Are you receiving emails sent by your website?

- Can you send emails to specific domains?

- Can you receive emails from specific domains?

- Can you send emails to specific domains when sending from another specific domain?

- Can you receive emails to a specific domain when sending from another specific domain?

- Can you successfully authenticate with the server when trying to send and receive emails?

- Have you correctly configured your email settings and port settings?

- Have you waited 24 hours for DNS propagation if you have just changed your DNS settings?

- Are there any service interruptions with your web server?

The more you can narrow down exactly what is happening here, the greater the clues are to what is actually going on. Yes, your emails don’t work, but why? What is the specific issue we are facing? Ultimately, when emails are sent, they should be received at their intended destination. When things don’t quite go to plan here, it means that something in the process is doing something different, which is generally down to some settings somewhere which need to be tweaked.

Tracking Received Email Deliverability

Receiving emails into IMAP is much easier to debug than sending emails, so it’s always best to start here. If your DNS records are set correctly, then you should be receiving emails by default. As long as you have an email account set up within cPanel, your emails should be arriving successfully. Always check your junk folders and make sure that your web server isn’t automatically junking / deleting emails that it believes are junk. I have seen this happen in the past with overactive junk email settings. Likewise, keep an eye out for any email forwarders that may have been set up and are taking priority when the email arrives, sending it off somewhere else before it lands in your inbox.

Generally speaking though, if you aren’t receiving emails it is due to your DNS records being incorrect or the email address you are sending to blocking emails from your server as they look like spam. Make sure you are sending these test emails from an email account that you know already works. It helps to have a few email accounts such as Hotmail / Outlook / Gmail etc. so that you can test this from various sources. If you aren’t receiving emails from your other email accounts that you know are working correctly, then re-check your DNS settings.

Tracking Sent Email Deliverability

Now onto tracking your sent email deliverability issues. This depends on where you are sending emails from in the first place. If you are sending emails from your website and they aren’t being received, this again can be for many reasons. It could be related to how your web server is configured for sending emails to @website.com: it could be trying to deliver your emails locally to an email account hosted on the web server, in which case, if you are running your emails remotely through Microsoft Exchange, you may need to update these settings. It could also be the way in which you are sending emails, as many web hosting companies actively turn off specific functionality on web servers which allows code to send emails, as a security measure. Speak with your web hosting company about this; it is the only way to know for sure.

If you are having issues sending emails via webmail or via a desktop application, first try to verify that this isn’t an issue with your desktop application communicating with your web server. Isolate each possible point of failure and test them one by one to see whether that point is failing or not. (I’m talking about testing specifically; you should never have a single point of failure for anything in business, and if you do, that is a whole other discussion to be had.) Assuming you have tested this, the next step is to look within WHM to see where your emails are actually going when they are sent from one of the accounts on IMAP. There is a debugging tool within WHM under Email > Mail Delivery Reports which allows you to find the email you just sent and see where it ended up. Often the problem relates to local / remote / automatic mail delivery settings. Check what is happening here; if the recipient isn’t who you intended, then you need to configure your settings within cPanel accordingly so that cPanel isn’t overriding any settings when it shouldn’t be.
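One way to take the desktop application out of the equation, and to make a test email easy to spot in the delivery reports, is to send it from a small script with a unique marker in the subject line. The sketch below uses Python's standard library; the function names are hypothetical, and the host, port and credentials are whatever your web hosting company has given you (a submission port with STARTTLS is assumed here, which may not match your setup).

```python
import smtplib
import uuid
from email.message import EmailMessage


def build_test_email(sender, recipient):
    """Create a test email with a unique marker in the subject line."""
    marker = uuid.uuid4().hex[:8]  # short random tag to search for later
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = f"Deliverability test {marker}"
    message.set_content(f"Test message {marker}. Please ignore.")
    return message, marker


def send_test_email(message, host, port, username, password):
    """Send the message over SMTP, assuming the server supports STARTTLS."""
    with smtplib.SMTP(host, port, timeout=30) as smtp:
        smtp.starttls()
        smtp.login(username, password)  # fails loudly if authentication is the issue
        smtp.send_message(message)
```

If `send_test_email` raises an error, the problem is between you and the server (authentication, port or connection). If it succeeds but the email never arrives, search for the marker in the delivery reports to see where the server routed it.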

Outliers and Things to Remember

The above is a rough guide and doesn’t cover everything you could ever check for. For example, one project we recently worked on had a unique issue which doesn’t crop up very often, whereby configuration settings within cPanel meant that a tiny number of sent emails were getting lost in the ether while everything else was absolutely fine. The situation was that within cPanel, multiple domains were configured, including website1.com and website2.com. One website was running Microsoft Exchange, the other IMAP. Exchange was working perfectly. IMAP was working perfectly... almost. The issue cropped up when website2.com on IMAP wanted to send emails to website1.com on Exchange. Because both domains were configured under the same cPanel account, the MX Entry settings within cPanel meant that the server attempted to deliver emails sent from website2.com on IMAP locally, due to the website1.com domain having an automatic configuration in place which decided where emails should be delivered. Changing this to Remote resolved the issue, meaning that cPanel no longer overrode the default behaviour of the DNS and things continued to function normally.
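The behaviour described above can be modelled in a few lines. This is a simplified sketch of the routing decision, not how cPanel or the mail software is actually implemented, and the function name `delivery_route` is made up for illustration: the server keeps a list of domains it believes it hosts email for, and anything on that list is delivered locally without ever consulting the DNS MX records.

```python
def delivery_route(recipient, local_domains):
    """Return 'local' if the server thinks it hosts the recipient's domain, else 'remote'."""
    domain = recipient.rsplit("@", 1)[-1].lower()
    if domain in local_domains:
        return "local"   # dropped into a mailbox on this server, MX records ignored
    return "remote"      # MX records are looked up and the email leaves the server
```

Before the fix, the server treated both domains as local, so `delivery_route("name@website1.com", {"website1.com", "website2.com"})` gives `"local"` and the email never reaches Exchange. After switching website1.com to Remote, the set is just `{"website2.com"}` and the same call gives `"remote"`.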

There are always things to bear in mind and check.

Summary

When using older technology such as IMAP instead of the far superior Microsoft Exchange, debugging email deliverability issues can be tough if you don’t know your way around your web server and the software that is running on it, particularly on cheap web hosting packages where you may not even have access to many of the tools outlined above. It is so important to make sure you have good web hosting in place for your business to allow you to debug problems like this quickly and continue working away. The last thing you want is weeks of delays trying to get to the bottom of these types of issues, as you could be missing vital orders and customer queries.

Should you need any support managing your email technologies, get in touch and we can happily support you in this area. The reality is that all web servers are configured differently, have many technologies running and various settings based on your individual requirements. Cheaper web hosting is more of a challenge to debug as you simply often don’t have access to the tools you need and can only deal with the issues by speaking with the web hosting company yourself or letting someone like ourselves do this on your behalf so they don’t try and bamboozle you with technical jargon with the aim of fobbing you off. It’s always good to work with companies who understand these technologies inside and out. Get in touch for more information.

by Michael Cropper | Apr 3, 2016 | Developer, Retrospective |

Rarely a day goes by without a new project with a new set of requirements, legacy systems and challenges which need to be overcome. This was one of those projects, where one of the many popular solutions we work through simply wasn’t going to meet the requirements on this occasion. I’ll leave the full reasons behind this story for a case study once we write this up. For now, to keep things simple, we had to migrate around 250,000 emails from an old web server running IMAP technology and split these between two new places, with the most used email accounts running on the recommended Microsoft Exchange platform and the other email addresses running on the older IMAP technology. There are many reasons why this had to be done, but I’ll skip over these for now.

So how exactly do you go about migrating 250,000 emails without losing access to anyone’s account, without any emails going astray during the migration and without losing any of the emails from the old system? Well, it requires quite a lot of planning, clear communication and, most importantly, a strategy that is going to work within the situation. This guide is for anyone else facing a large email migration project where it is essential that everything continues running at all times. Did we get it 100% perfect? Not quite. I’ve yet to experience any IT or software project that was 100% perfect, but I’d like to think we did this with 99.5% perfection given the restrictions we were working with. This guide is going to cut straight to the chase and explain the key stages of the process you need to go through to get ever closer to the perfect migration.

Technologies

If you aren’t familiar with the differences between IMAP and Microsoft Exchange, then have a good read through our guide on the topic to get familiar. In short, when needing full traceability and efficient working with email technologies from anywhere in the world and on any device, quite simply you need to be using Microsoft Exchange. IMAP is not suitable for running a large organisation for a multitude of reasons.

With IMAP being the less advanced technology, this ultimately means that there are things that it doesn’t do as efficiently as Microsoft Exchange and most importantly, IMAP isn’t actually a piece of software per se. It is actually a protocol, a set of rules for how things are handled. What this means is that while there is a set of rules for how things are handled, the protocol is implemented by software running on a web server, and this in itself can lead to a whole host of problems depending on how well, or how badly, the web server has been set up and configured. As such, it is difficult to predict with 100% accuracy exactly what is going to be needed without actually jumping in and getting on with it. This being said, you can certainly improve your odds by working through a structured process.

Requirements Gathering

This has to be one of the most important aspects of any project. Before you jump in and start recommending any solutions, you need to fully understand the situation that is in place at the minute, the issues with this set up and the ideal functionality that is required within the organisation. Spend time talking to as many people as possible to understand the challenges they face and most importantly explaining the variety of options and their benefits. Once you have a full list of requirements you can then begin to plan the best solution.

Planning

With your set of requirements in place, you can review the options that are available to you and decide upon the best solution. There is no one-size-fits-all when it comes to technology, so make sure you are taking advice from a reputable company, it will save you a fortune in the long term. Or if you are the company implementing this process for someone else, make sure you don’t bite off more than you can chew, projects like this can go bad really quickly if you aren’t 100% sure what you’re doing.

In this specific example, our planning stage was a little more complex due to splitting all of the old IMAP emails between a new IMAP destination and Microsoft Exchange. This in itself required a change in email addresses for some of the users as you cannot use multiple technologies for emails on a single domain such as @example.com. In this case we had to set up a sub-domain, something.example.com, which meant that the @example.com email addresses could be powered by the superior Microsoft Exchange technology while the @something.example.com email addresses could be powered by the older IMAP technology.

To achieve this requires good web hosting and DNS management in the background. When you are on cheaper budget systems, you often cannot set up sub-domain delegation for MX records, which is required to do this. If you aren’t sure what an MX record is, essentially this is a piece of information held in your DNS. When an email is sent to hello@example.com, the sending mail server checks the MX record associated with example.com to see where it should deliver the email. This is generally either Microsoft Exchange or a mail server somewhere, often the one running on your web server. As we were using two different technologies in the background, we needed MX records pointing at two different mail systems. That does not work on a single domain, because every mail server listed for a domain is expected to accept email for all of its addresses, hence needing to set up an MX record at the sub-domain level too.
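As a rough sketch of how you might check where email for each domain is being routed, the snippet below lists MX records in priority order. The function names sort_mx and lookup_mx are made up for this illustration, and it assumes the dig utility is installed on the machine you run it from.

```shell
# Tidy up MX lookup output: lowest preference number (highest priority) first.
# stdin: lines in the form "<preference> <host>." as printed by `dig +short MX`.
sort_mx() {
  sort -n -k1,1 | awk '{ sub(/\.$/, "", $2); print $1, $2 }'
}

# Look up the MX records for a domain (needs network access and dig).
lookup_mx() {
  dig +short MX "$1" | sort_mx
}
```

Once the sub-domain MX record is in place, running lookup_mx example.com and lookup_mx something.example.com should show a different mail host for each.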

A key part of the planning process is communication which brings me on to the next section, before we look at the nitty gritty of how to actually migrate 250,000 emails with zero downtime.

Communication

Throughout any project, failure is generally down to a lack of communication in one way or another. Migrating 250,000 emails is no different. Email is generally the most efficient way of speaking to people, but you cannot rely on it should anything go wrong during an email migration. In other words, you need another form of communication as a backup should things go pear shaped for whatever reason, whether that is another email account on a 3rd party platform or the good old telephone.

As the technical person implementing this, you need to communicate the process behind migrating the large number of emails to the key stakeholders in a non-technical and easily understandable way, which can always be a challenge when working with naturally very technical aspects. So whatever you do, make sure you are over-communicating with everything you are doing.

Implementation

So, let’s get down to the nitty gritty for how to migrate 250,000 emails with zero downtime.

Old Web Server Review

You need to know what you are starting with. Generally speaking, when you are migrating web servers for whatever reason, it is usually due to the old web server being a bit rubbish in whatever form. This is often coupled with a lack of the flexibility that you are used to when working with industry leading technology. You must fully review every aspect of the old web server and speak to the key stakeholders who have had anything to do with managing it, to fully understand any interesting configurations that may have been set up in the past and can be easily missed.

We’ve already covered how to migrate a web server seamlessly with zero downtime, so have a read of that if you need more information on the topic. For now we’re going to look specifically at the email side of things. Remember, we wanted to migrate 250,000 emails from IMAP, half to Microsoft Exchange and half to the new web server which was also running IMAP. This required two significantly different approaches.

Prepare the DNS

First things first, we would always recommend migrating things in stages. So let’s assume that you are first going to migrate the contents of the web server, namely your websites, then secondly migrate your emails. Or vice versa. To do this requires you to prepare your DNS settings accordingly.

As DNS propagation can take up to 24 hrs to complete fully, you need to plan this step in advance. With good DNS providers, this is generally extremely quick, although do not count on this, plan in advance.

In our example, we were also migrating the DNS settings which were previously set at the old web hosting company. Instead we moved these to an independent setup, meaning that we could prepare these in advance so propagation could take place throughout the system prior to us changing nameservers at the registrar.
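One way to sanity check the prepared records before touching the registrar is to ask the old and the new nameservers the same question and compare the answers. The sketch below does that with dig. The function names and the nameserver hostnames in the usage note are hypothetical, and the DIG variable only exists so the lookup command can be swapped out.

```shell
# Ask one specific nameserver for a record (needs network access and dig).
# $1 = nameserver, $2 = record name, $3 = record type
query_ns() {
  "${DIG:-dig}" +short "@$1" "$2" "$3" | sort
}

# Succeeds only if both nameservers return the same, non-empty answer.
# $1 = old nameserver, $2 = new nameserver, $3 = record name, $4 = record type
records_match() {
  old_answer="$(query_ns "$1" "$3" "$4")"
  new_answer="$(query_ns "$2" "$3" "$4")"
  [ -n "$old_answer" ] && [ "$old_answer" = "$new_answer" ]
}
```

For example, records_match ns1.old-host.example ns1.new-dns.example example.com MX should succeed for every record you expect to stay the same, such as the MX record while the emails are still running off the old web server.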

Registrar – Changing Nameservers

Once we had successfully added the DNS settings at the new DNS provider so that the website would run off the new web server and the emails would continue running off the old web server, we were set to go with changing the nameservers. Having prepared everything in advance, we could be confident that downtime was kept to a minimum when we made this change.

Ok, so now the first process had been completed, this meant that we could move onto the emails. To do so requires more planning and careful implementation.

Preparing Microsoft Exchange

If you have ever set up Microsoft Exchange before you will know that there are a few settings to configure at the DNS level. To configure Microsoft Exchange in preparation for when we needed it, we actually had to add some of the DNS settings at the old web hosting company prior to switching the nameservers. This is required to give you the time to authorise your account along with configuring all of the relevant mailboxes and communicating with the individual users for the project.

This guide is not designed to look at how to configure Microsoft Exchange, so if you don’t know how to do that, get in touch and I’m sure we can help you in some way with what you’re working on. For now, assume that a significant amount of time was taken to get everything configured correctly. Interestingly, as part of this specific project, we had to hunt down a rogue Microsoft Exchange account that had been created many moons ago by someone within the organisation with zero information about what had been set up and why and most importantly who owned this. As the Microsoft Exchange system is extremely secure, there are safeguards in place to prevent someone else taking control of an Exchange domain if one has already been set up, meaning that we first had to hunt this down and regain control of this. I certainly hope you never have to do this as I can’t tell you how time consuming this is to do.

Ok, so now you have Exchange all prepared for your email migration project. Next is preparing the IMAP email accounts on your new web server.

Preparing the new IMAP email addresses

First things first, you need to create all of the accounts on the new web server for all of the email addresses which are going to be using IMAP. Make sure you do an audit up front on the old web server to understand how large the individual email accounts are. Often when you create an email account with a default size of say 1GB on IMAP, then for certain users this may not be enough space. You want to plan for this in advance as you do not want to find this out when you are half way through transferring emails only to have to start again.
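If you have shell access to the old web server, a quick way to run this audit is to feed the output of du into a small filter. This is only a sketch: the over_quota function name is made up, and the /home/USER/mail/DOMAIN/MAILBOX layout used in the usage note is an assumption based on a typical cPanel server, so check where your own server stores its mailboxes.

```shell
# Print mailboxes that are larger than a planned quota.
# $1    = quota in MB
# stdin = "<size in KB><TAB><path>" lines, as produced by `du -sk`
over_quota() {
  awk -F '\t' -v quota_kb="$(( $1 * 1024 ))" \
    '$1 > quota_kb { printf "%s\t%d MB\n", $2, $1 / 1024 }'
}
```

Running du -sk /home/*/mail/*/* | over_quota 1024 would then list every mailbox that will not fit within a 1GB default, so you can raise the limits before you start transferring anything.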

Thankfully on good web server control panels such as cPanel, doing this initial research is simple and relatively quick. Unfortunately on poor control panels, which often come with budget web hosting packages, finding this out beforehand can be a little challenging and in some cases simply not possible, which is a little annoying.

Switching the New Email Addresses On

Now everything has been prepared with regards to your new email technologies, it’s time to switch the DNS settings to point all the email related activities to the correct sources. Make sure you don’t make any mistakes when doing this as everything will start to go wrong and emails could go astray.

Migrating 250,000 Emails

Ok, so now we have everything prepared, we’ve switched the emails on but there is nothing there other than emails which have been sent from this point forward. Jumping back a little, it is actually possible to migrate the emails to Microsoft Exchange when configuring all of the accounts. There is a handy tool within Microsoft Exchange which isn’t 100% reliable but can often connect to your old mail server and import the IMAP emails into Exchange, even though Exchange uses a different protocol. When this works, this is perfect as you now have a system whereby all of the sent and received emails, along with the relevant folder structures that people use within their emails, have been successfully ported over to Exchange. I’d recommend doing this section prior to switching your new emails on because it can take a while, as in days. So set this running and be patient. The larger the number of mailboxes you are dealing with, the longer this will take. To put this into perspective, when migrating in batches, migrating around 20 mailboxes from IMAP to Exchange took around 8 hours.

Now, as mentioned before, IMAP isn’t as good as Exchange when it comes to the underlying technology. So while there is a handy little tool that comes with Microsoft Exchange to do this task, the same is not true for IMAP. As IMAP runs off your web server, there are endless different configurations, setups and options which come into play, meaning that you’re going to have to figure this bit out for yourself to a certain extent with a lot of testing of various options.

Thankfully there is a seriously awesome tool called IMAPSync, which is designed to help with a lot of this process. At only £100 it is worth its weight in gold and anyone attempting to migrate any amount of emails between web servers should purchase this tool. The developer behind the scenes who built the tool is also extremely useful when helping with the usual configuration niggles that you face with things like this.

I’m not going to cover how to use this tool in detail as this is going to differ depending on your individual requirements. Suffice to say, that with a bit of testing for various configurations etc. you will get there in the end. Make sure you test things first on a test account on your new IMAP web server though so you can be confident that this is working as expected. The last thing you want is to be all gung-ho and start everything running only to find you did something wrong.

As a quick overview of IMAPSync, this tool requires you to install the supporting software from the Yum repositories, upload the tarball to the new web server under the root account via SSH, extract the archive, test a few configurations to make sure the scripts can actually access the internet and then you’re good to go. Something to bear in mind is that if you are having trouble getting the script to connect, it’s likely due to the firewall running on your web server, either physical or software based, which is blocking specific ports on the outbound side, generally port 143 for IMAP (or port 993 for IMAP over SSL).
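To give a flavour of what a test run can look like, here is a minimal sketch that builds the arguments for a single mailbox. The options themselves (--host1, --user1, --passfile1 and their host2 equivalents, plus --dry) are standard imapsync options, but the imapsync_args function, the hostnames in the usage note and the /root/imapsync-secrets folder are made up for this example, and it assumes the mailbox has the same username on both servers.

```shell
# Print the imapsync arguments for one mailbox, one argument per line.
# $1 = old mail server, $2 = new mail server, $3 = mailbox username
# $4 = "dry" (the default) to simulate only, anything else for the real run
imapsync_args() {
  old_host="$1"
  new_host="$2"
  user="$3"
  mode="${4:-dry}"
  secrets="/root/imapsync-secrets"   # hypothetical folder of password files

  printf '%s\n' \
    --host1 "$old_host" --user1 "$user" --passfile1 "$secrets/$user.old" \
    --host2 "$new_host" --user2 "$user" --passfile2 "$secrets/$user.new"

  # --dry makes imapsync report what it would copy without copying anything
  if [ "$mode" = "dry" ]; then
    printf '%s\n' --dry
  fi
}
```

You could then run imapsync_args mail.old-host.example mail.new-host.example test@something.example.com | xargs imapsync against your test account, check the output, and only pass a fourth argument of live once you are happy with what the dry run reports.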

So there you go, once you’ve managed to run through this entire process, you’ll be in a situation where all of your IMAP emails are now also migrated to the new web server.

Sender Policy Framework

Something to bear in mind is that when setting up IMAP email accounts, your emails will likely be blocked as spam if you don’t have SPF records set up at the DNS level. Leading technologies such as Microsoft Exchange will by default block emails from domains that don’t authenticate themselves through SPF records, as many of these emails are automatically generated spam. Keep this in mind if you start getting issues with deliverability to certain email accounts or domains.

Summary

We deal with email migration on a regular basis and you often can’t quantify the project before you get involved, it is extremely difficult to predict. Overall though, what I can say is that if you are going through a similar project, you need to be planning things effectively and implementing the right technologies to make sure everything works. For larger organisations, losing access to their emails for even an hour is a lifetime. With a well thought out strategy, detailed requirements gathering and planning, your implementation will be much more straightforward. Follow a similar process when you are migrating emails and you too will be able to migrate a significant number of emails with virtually zero downtime.

The only other thing I would finish with is that it is best if you can implement some of the critical items such as DNS changes at times which are less likely to have an impact, for example late in the evenings or at weekends. It’s not ideal for the person implementing this, although the benefits to the organisation you are working with are enormous, as emails don’t end up getting lost in the ether while DNS propagation is taking place. It is also recommended to keep your users informed at all stages and most importantly tell them when not to send emails while you are making DNS changes. Users often work at all hours of the day when you may be expecting them not to be, so keep in touch with people throughout.

by Michael Cropper | Mar 23, 2016 | Developer, Security, Technical, Thoughts |

If it ain’t broke, don’t fix it, right? Wrong. This is the belief system of inexperienced software developers and business owners who are, in some cases rightfully so, worried about what problems any changes may cause. The reality though is that as a business owner, development manager or other, you need to make sure every aspect of software you are running is up to date at all times. Any outdated technologies in use can cause problems, likely have known vulnerabilities and security issues and will ultimately result in a situation whereby you are afraid of making any changes for fear of the entire system imploding on itself.

Ok, now I’ve got that out of the way, I assume you are now also of the belief system that all software needs to be kept up to date at all times without exception. If you are not convinced, then as a developer you need to come to terms with this quickly, or as a business owner you have not been in a situation yet which has resulted in a laissez-faire attitude to software updates costing you tens of thousands of pounds to remedy, much more expensive than pro-active updates and regular maintenance.

The biggest challenge software developers face at the coal face of the build process is the inherent unpredictability of software development. As a business owner or user of a software application, whether that is an online portal of some form, a web application, a mobile app, a website or something in between, what you see as a user is the absolute tip of the iceberg, the icing on the cake and this can paint completely false pictures about the underlying technology.

Anyone who has heard me speak on software development and technologies in the past will have no doubt heard my usual passionate exchange of words along the lines of using the right technology, investing seriously and stop trying to scrimp on costs. The reason I am always talking to businesses about this is not as part of a sales process designed to fleece someone of their hard earned money. No. The reason I talk passionately about this is because when businesses take on board the advice, this saves them a significant amount of money in the long run.

To build leading software products that your business relies on for revenue you need to be using the best underlying technology possible. And here lies the challenge. Any software product or online platform is built using a plethora of individual technologies that are working together seamlessly – at least in well-built systems. Below is just a very small sample of the individual technologies, frameworks, methodologies and systems that are often working together in the background to make a software product function as you experience as the end user.

What this means though is that when something goes wrong, this often starts a chain reaction which impacts the entire system. This is the point when a user often likes to point out that “It doesn’t work” or “This {insert feature here} is broke”. And the reason something doesn’t work is often related to either a poor choice of technology in the first place or some form of incompatibility or conflict between different technologies.

To put this into a metaphor that is easier to digest, imagine an Olympic relay team. Now instead of having 4 people in this team, there were 30-50 people in this team. The team in this analogy is the software with the individual people being the pieces of software and technologies that make the software work. Now picture this. An outdated piece of technology as part of the team is the equivalent of having an athlete from 20 years ago who was once top of their game, but hasn’t trained in the last 20 years. They have put on a lot of weight, their fitness level is virtually zero and they can’t integrate as part of the team and generally have no idea what they should be doing. This is the same thing that happens with technologies. Ask yourself the question, would you really want this person as part of the team if you were relying on them to make your team the winning team at the Olympics? The answer is no. The same applies with software development.

Now to take the analogy one step further. Imagine that every member of the relay team is at a completely different level of fitness and experience. Each member of the team is interacting with different parts of the system, but often not all of the system at once. What this means is that when one of the athletes decides to seriously up their game and improve their performance, i.e. a piece of technology gets updated as part of the system, then this impacts other parts of the system in different ways. The reality of software development is that nothing is as linear as a relay team where person 1 passes the baton to person 2 and so on. The reality of software development is that often various pieces of technology will impact on many other pieces of technology and vice versa and often in a way that you cannot predict until an issue crops up.

So bringing this analogy back to software development in the real world. What this means is that when a new update comes out, for example an update to the core Apple iOS operating system which all iPhone and iPad applications rely on, this update can cause problems if a key piece of technology is no longer supported for whatever reason. This seemingly small update from version 9.0 to 9.2 for example could actually result in a catastrophic failure which needs to be rectified for the mobile application to continue to work.

Here lies the challenge. As a business owner, IT manager or software developer, you have a choice. To update or not to update. To update leads you down the path of short term pain and costs to enhance the application with the long term benefits of a completely up to date piece of software. To hold off on updating leads you down the path of short term gain of not having to update anything with the long term implications being that over time as more and more technologies are updated your application is getting left in the digital dark ages, meaning that what would have been a simple upgrade previously has now resulted in a situation whereby a full or major rebuild of the application is the only way to go forward to bring the application back into the modern world. Remember the film Demolition Man with Wesley Snipes and Sylvester Stallone, when they stayed frozen in time for 36 years and how much the world had changed around them? If you haven’t seen the film, go and watch the 1993 classic, it will be a well spent 1 hr 55 mins of your time.

The number of interconnecting pieces powering any software product in the background is enormous and without serious planned maintenance and improvements things will start to go wrong, seemingly randomly, but in fact being caused by some form of automatic update somewhere along the lines. Just imagine a children’s playground at school if this was left unattended for 12 months with no form of teacher around to keep everything under control. The results would be utter chaos within no time. This is your software project. As a business owner, IT manager or software developer you need a conductor behind your software projects to ensure they are continually maintained and continue to function as you expect. It is a system and just like all systems of nature, they tend to prefer to eventually lead towards a system of chaos rather than order. There is a special branch of mathematics called Chaos Theory which talks about this in great depth should you wish to read into the topic.

As a final summary about the inherent unpredictability of software development. Everything needs to be kept up to date, and a continual improvement process and development plan is essential so that your software doesn’t get left behind. A stagnant software product in an ever changing digital world soon becomes out of date and needs a significant overhaul. What this does also lead to is the highly unpredictable topic of timelines and deliverables when dealing with so many unknown, unplanned and unpredictable changes that will be required as a continual series of improvements are worked through. What I can say is that any form of continual improvement is always far better than sitting back and leaving a system to work away. For any business owner who is reading this, when a software project is delayed, this is generally why. The world of software development is an ever moving and unpredictable goal post which requires your understanding. Good things come to those who wait.

by Michael Cropper | Feb 13, 2016 | Developer |

To set the scene. Firstly, you should never be hosting emails on your web server, it is extremely bad practice these days for a variety of reasons, read the following for why; Really Simple Guide to Business Email Addresses, Really Simple Guide to Web Servers, Really Simply Guide to Web Server Security, The Importance of Decoupling Your Digital Services and most importantly, just use Microsoft Exchange for your email system.

Ok, so now we’ve got that out of the way, let’s look at some of the other practicalities of web application development. Most, if not all, web applications require you to send emails in some form to a user. Whether this is a subscriber, a member, an administrator or someone else. Which is why it is important to make sure these emails are being received in the best possible way by those people and not getting missed in the junk folder, or worse, automatically deleted which some email systems actually do.

Sending emails from your web application is not always the most straightforward task and hugely depends on your web server configuration, the underlying technology and more. So we aren’t going to cover how to do this within this blog post, instead we are going to assume that you have managed to implement this and are now stuck wondering why your emails are ending up in your own and your customers’ junk folders. The answer to this is often simple, it’s because your email looks like spam, even though it isn’t.

Introducing Sender Policy Framework (SPF Records)

The Sender Policy Framework is an open standard which specifies a technical method to prevent sender address forgery. To put this into perspective, it is unbelievably simple to send a spoof email from your.name@your-website.com. Sending spoof emails was something I was playing around with alongside my friends when still in high school aged 14, sending spoof emails to each other for a bit of fun and a joke.

Because it is so simple to send spoof emails, most email providers (Microsoft Exchange, Hotmail, Outlook, Gmail, Yahoo etc.) will often automatically classify emails as spam if they aren’t identified as a legitimate email. A legitimate email in this sense is an email that has been sent from a web server which is handling the Mail Exchanger technologies.

To step back a little. The Mail Exchanger is a record which is set at the DNS level and is referred to as the MX record. Whenever someone sends an email to your.name@your-website.com, the underlying technologies of the internet use this record to route the email to the server which is handling your emails, the server which is identified within your MX Record at your DNS. The server then does what it needs to do when receiving this email so you can view it.

For example, when hosting your email on your web server with IMAP (as explained before, you shouldn’t be doing this), your MX record will likely be set to something along the lines of ‘mail.your-domain.com’. When using Microsoft Exchange, your MX record will be something along the lines of ‘your-domain-com.mail.protection.outlook.com’.

What this means is that if an email is sent from @your-domain.com which hasn’t come from one of the IP addresses attached to your-domain-com.mail.protection.outlook.com, then this looks to have been sent by someone who is not authorised to send emails from your domain name, and hence why this email will then end up in the spam box.

With Microsoft Exchange specifically to use this as an example, you will also have an additional SPF record attached to your DNS as a TXT type which will look something along the lines of, ‘v=spf1 include:spf.protection.outlook.com -all’, which translates as, the IP addresses associated with the domain spf.protection.outlook.com are allowed to send emails from the @your-domain.com email address, and deny everything else.

This is perfect for standard use, but doesn’t work so well when trying to send emails from a web application. Which is why we need to configure the DNS records to make the web server which is sending emails from your web application a valid sender too.

How to Configure your DNS with SPF Records

To do this, we simply add the IP address of your web server into the DNS TXT SPF record as follows;

v=spf1 include:spf.protection.outlook.com ip4:123.456.123.456 -all

Your records will likely be different, so please don’t just copy and paste the above into your DNS. Make sure you adapt this to your individual needs. That is it. Now when your web application sends emails to your users, they will arrive in their main inbox instead of their junk folder. Simple.
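As a rough sketch of how the record is put together and checked, the two helper functions below build an SPF value and pick the SPF record out of a TXT lookup. The function names build_spf and extract_spf are my own, 203.0.113.10 in the usage note is a documentation-only placeholder IP address, and the lookup assumes the dig utility is available.

```shell
# Build an SPF value from one include domain plus any number of IPv4 addresses.
# $1 = include domain, remaining arguments = IP addresses allowed to send
build_spf() {
  record="v=spf1 include:$1"
  shift
  for ip in "$@"; do
    record="$record ip4:$ip"
  done
  # -all tells receiving servers to reject anything not listed above
  printf '%s -all\n' "$record"
}

# Pick the SPF record out of TXT lookup output, removing the quotes dig adds.
# stdin: the output of `dig +short TXT your-domain.com`
extract_spf() {
  tr -d '"' | grep '^v=spf1'
}
```

For example, build_spf spf.protection.outlook.com 203.0.113.10 prints the value to paste into your DNS, and once it has propagated, dig +short TXT your-domain.com | extract_spf should print the same value back.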

A few handy tools for diagnosing DNS propagation along with SPF testing include;

by Michael Cropper | Feb 7, 2016 | Developer |

Quick reference guide for how to implement Let’s Encrypt. Let’s Encrypt is a new free certificate authority, allowing anyone and everyone to encrypt communications between users and the web server with ease. For many businesses, cost is always a concern, so having to pay several hundred pounds for a basic SSL Certificate often means that most websites aren’t encrypted. This no longer needs to be the case and it would be recommended to implement SSL certificates on every website. Yes…we’re working on getting around to it on ours 🙂

We recently implemented Let’s Encrypt on a new project, Tendo Jobs, and I was quite surprised how relatively straightforward this was to do. It wasn’t a completely painless experience, but it was reasonably straightforward. For someone who manages a good number of websites, the annual cost savings from implementing Let’s Encrypt on all websites that we manage and are involved with are enormous. Looking forward to getting this implemented on more websites.

Disclaimer as always, make sure you know what you’re doing before jumping in and just following these guidelines below. Every web server setup and configuration is completely different. So what is outlined below may or may not work for you, but hopefully either way this will give you a guide to be able to adjust accordingly for your own web server.

How to Set up Let’s Encrypt

So, let’s get straight into this.

- Reference: https://community.letsencrypt.org/t/quick-start-guide/1631

- Run command, yum install epel-release, to install the EPEL Package, http://fedoraproject.org/wiki/EPEL. Extra Packages for Enterprise Linux, lots of extra goodies, some of which are required.

- Run command, sudo yum install git-all, to install GIT, https://git-scm.com/book/en/v2/Getting-Started-Installing-Git

- Clone the GIT repository for Let’s Encrypt with the command, git clone https://github.com/letsencrypt/letsencrypt, http://letsencrypt.readthedocs.org/en/latest/using.html#id22

- For cPanel servers, need to run a separate script, hence the next few steps

- Install Mercurial with the command, yum install mercurial, http://webplay.pro/linux/how-to-install-mercurial-on-centos.html. This is Mercurial, https://www.mercurial-scm.org/

- Run the install script command, hg clone https://bitbucket.org/webstandardcss/lets-encrypt-for-cpanel-centos-6.x /usr/local/sbin/letsencrypt && ln -s /usr/local/sbin/letsencrypt/letsencrypt-cpanel* /usr/local/sbin/ && /usr/local/sbin/letsencrypt/letsencrypt-cpanel-install.sh, https://bitbucket.org/webstandardcss/lets-encrypt-for-cpanel-centos-6.x

- Run the command to verify the details have been installed correctly, ls -ald /usr/local/sbin/letsencrypt* /root/{installssl.pl,letsencrypt} /etc/letsencrypt/live/bundle.txt /usr/local/sbin/userdomains && head -n12 /etc/letsencrypt/live/bundle.txt /root/installssl.pl /usr/local/sbin/userdomains && echo "You can check these files and directory listings to ensure that Let's Encrypt is successfully installed."

- Generate an SSL certificate with the commands;

- cd /root/letsencrypt

- ./letsencrypt-auto --text --agree-tos --email michael.cropper@contradodigital.com certonly --renew-by-default --webroot --webroot-path /home/{YOUR ACCOUNT HERE}/public_html/ -d tendojobs.com -d www.tendojobs.com

- Note: Make sure you change the domains and the email address in the above, and replace {YOUR ACCOUNT HERE} with your cPanel username, without the brackets.

- Reference: https://forums.cpanel.net/threads/how-to-installing-ssl-from-lets-encrypt.513621/

- Run the script with the commands;

- cd /root/

- chmod +x installssl.pl

- ./installssl.pl tendojobs.com

- Again, change your domain name above

- Set up a CRON job within cPanel as follows, which runs every 2 months, at midnight on the 1st of every second month;

- 0 0 1 */2 * /root/.local/share/letsencrypt/bin/letsencrypt --text certonly --renew-by-default --webroot --webroot-path /home/{YOUR ACCOUNT HERE}/public_html/ -d tendojobs.com -d www.tendojobs.com; /root/installssl.pl tendojobs.com

- For reference, the SSL certificate is placed in /etc/letsencrypt/live/bundle.txt when installing Let’s Encrypt.

- Done!
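If you have several accounts or domains to cover, the certificate command from the steps above can be put together with a small helper. This is only a sketch: build_cert_command is a made-up name, the email address, username and domains are placeholders, and it just prints the command so you can check it before running it yourself. Note that the options are written with two plain hyphens.

```shell
#!/bin/sh
# Sketch only: prints the certificate request command, it does not run it.
# build_cert_command is a hypothetical helper, not part of Let's Encrypt.
build_cert_command() {
    email="$1"      # contact address for expiry notices
    account="$2"    # cPanel username that owns public_html
    shift 2
    cmd="./letsencrypt-auto --text --agree-tos --email $email certonly --renew-by-default --webroot --webroot-path /home/$account/public_html/"
    # Append one -d option per domain given on the command line
    for domain in "$@"; do
        cmd="$cmd -d $domain"
    done
    printf '%s\n' "$cmd"
}

# Placeholder values, swap in your own
build_cert_command you@example.com yourusername example.com www.example.com
```

Once the printed command looks right, run it from /root/letsencrypt as in the steps above.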

A note on adding the CRON job: this is done at the root level of the server (cPanel WHM), not within a cPanel user account. cPanel user accounts don’t have root privileges, so a CRON job created from within one won’t work. To edit the CRON job at the root level, first SSH into your server, then run the following command to edit the main CRON job file;

crontab -e

Add the CRON job details to the bottom of this file and save it. Then restart the CRON daemon with the following command;

service crond restart
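To double check that the entry was saved, you can list root’s crontab and look for the renewal line. A minimal sketch, assuming the job contains the word letsencrypt somewhere in the command; has_renewal_job is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: succeeds if the crontab text on stdin has an active (uncommented)
# line mentioning letsencrypt. has_renewal_job is a hypothetical helper.
has_renewal_job() {
    grep -v '^[[:space:]]*#' | grep -q 'letsencrypt'
}

# On the server, as root:
#   crontab -l | has_renewal_job && echo "Renewal job found"
```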

It is recommended to have a 2 month renewal time at first. Let’s Encrypt certificates are valid for 90 days, so this gives you around 4 weeks to sort out any problems before your certificate expires. Thankfully, you should receive an email from your CRON service if the renewal fails, and you will also receive an email from Let’s Encrypt when the certificate is about to expire, so there are two safeguards in place.
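If you would rather not rely on the emails alone, openssl can tell you whether a certificate is inside that 4 week window. This is a sketch under a few assumptions: openssl is installed on the server, cert_expires_within is a hypothetical helper name, and the certificate path in the usage comment is a placeholder for your own domain.

```shell
#!/bin/sh
# Sketch: returns 0 if the certificate expires within DAYS days (or already has),
# 1 if it is still valid beyond that, 2 if the file cannot be read.
# cert_expires_within is a hypothetical helper name.
cert_expires_within() {
    cert_file="$1"
    days="$2"
    [ -r "$cert_file" ] || return 2
    # openssl's -checkend N exits 0 when the certificate is still valid
    # N seconds from now, and non-zero when it will have expired by then.
    if openssl x509 -checkend "$((days * 86400))" -noout -in "$cert_file" >/dev/null 2>&1; then
        return 1
    fi
    return 0
}

# On the server (placeholder path):
#   if cert_expires_within /etc/letsencrypt/live/yourdomain.com/cert.pem 30; then
#       echo "Certificate expires within 30 days, time to renew"
#   fi
```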

On-Going Automatic Renewal & Manual SSL Certificate Installation

It’s important to note that when your Let’s Encrypt certificates are renewed automatically, they won’t be installed automatically. The installssl.pl script doesn’t seem to handle the installation of the renewed certificate. Instead, you may need to update the renewed certificate manually within the cPanel user account for the domain. To do this, open cPanel, go to the SSL/TLS settings page, update the currently installed (and about to expire) SSL certificate and enter the new details. To get the details for the new certificate, log in to the server as root via SSH and look in the folder /etc/letsencrypt/live/yourdomain.com, where you can use the commands pico cert.pem and pico privkey.pem to view the contents you need to copy over to cPanel. It’s worth decoding the certificate first to make sure the dates have been updated; you can use a tool such as an SSL Certificate Decoder for this. If the certificate is still showing the old details, you may need to run the command letsencrypt-auto renew, which will update the certificates.
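As an alternative to pasting the certificate into an online decoder, you can read the dates straight off the file on the server with openssl. A minimal sketch, assuming openssl is installed; show_cert_dates is a hypothetical helper name and the path in the usage comment is a placeholder.

```shell
#!/bin/sh
# Sketch: print the subject and the validity dates of a PEM certificate.
# show_cert_dates is a hypothetical helper name.
show_cert_dates() {
    openssl x509 -noout -subject -dates -in "$1"
}

# On the server (placeholder path):
#   show_cert_dates /etc/letsencrypt/live/yourdomain.com/cert.pem
```

The notBefore and notAfter lines in the output are the ones to compare against the certificate currently installed in cPanel.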

Hope this is useful for your setup. Any questions, leave a comment.